However, almost a fifth of firms (17%) have yet to decide where oversight responsibility resides, leading to a lack of clarity on a single ‘best practice’ approach on which functions and teams should be involved.

The Risk Management Considerations for Generative AI Report from ORX, the world’s largest operational risk association, suggests that the conversation is shifting away from whether to allow the use of these tools and towards their potential benefits. This shift is reflected in the report's findings, with 75% of firms saying that they have an AI use policy in place or are planning to put one in place within the next 18 months. However, it seems that some firms need to do more to communicate these policies effectively.

Steve Bishop, Research and Information Director at ORX, comments, “Only last October (2023), the picture regarding the use of GenAI in daily operations was very different, with many banks and insurers taking a cautious approach or even blocking access, mainly to ChatGPT. Since then, we’ve seen a slight shift and some firms have been using pilot exercises to explore the value of these tools and understanding use cases.

“Microsoft Copilot features highly, with some firms deploying it across the whole organisation. This is perhaps due in part to the fact that Copilot is integrated with the Microsoft ecosystem that many firms use.”

Most of the surveyed firms are currently applying a cross-functional approach to ensure adequate oversight and management of risks driven by GenAI. Respondents recognise that these risks are still evolving, and that input from a variety of risk professionals and stakeholders helps firms to better understand and manage them. Overall, 75% of firms say that oversight of risks associated with GenAI lies with operational risk functions, but in partnership with other functions, such as technology, cyber, legal, and compliance teams.

Steve Bishop adds, “Financial firms are trying to manage the balance between the potential of GenAI to innovate and improve efficiencies and the risks its use can bring. They are now trying to make sure they have robust oversight and governance in place.

“From our study and discussions with our communities, it seems likely that firms will take a cross-functional approach to managing these risks for the foreseeable future. This means involving a variety of relevant risk functions and stakeholders. The majority are also considering how they will scale this oversight approach to meet the expected increase in use.”

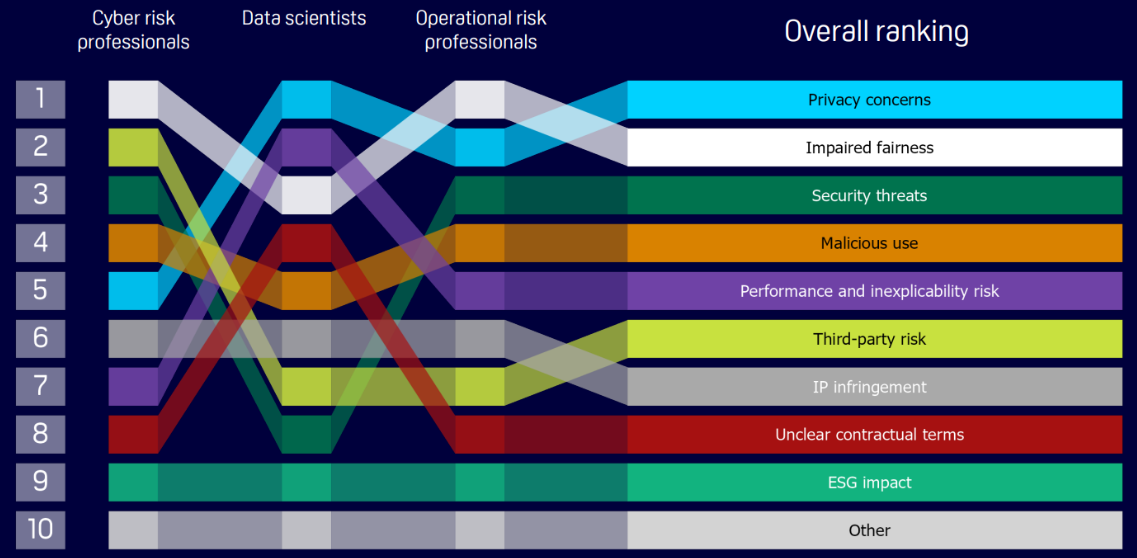

The main GenAI risk concern identified by respondents in the report was privacy (the unauthorised use or disclosure of personal or sensitive information), followed by impaired fairness and security threats. Despite the media attention given to the ESG impacts of GenAI models, these are the impacts that firms are least concerned about. This is expected to change over time as adoption of these technologies accelerates and they become more embedded in business processes.